When To Call a Landing Page A/B Test

You have a great idea that needs to be tested. You’ve done the research, created the design, and are ready to go. But do you know when your test will be done? When it comes to landing pages, knowing when to call your A/B test is just as important as any other aspect of the experiment.

The Full Cup team of testing experts has developed a formula for landing page A/B success over our decades in the industry, and there are five key categories we consider before determining any results.

1. The Hypothesis + KPI

Think back to grade-school science class. What do you need before any experiment? A hypothesis! While easy to overlook, a clear and concise hypothesis keeps your test focused and helps you determine when to end it. What is the goal of your test? Is it success-specific, like growth and conversion rate lift, or educational, such as learning user behavior? Your hypothesis (and the corresponding KPI) should be top of mind as you work through the calling process.

Let’s say you’ve designed a test centered around a new lead form. Your hypothesis is that this change would increase form submissions, yet during your test you notice a small dip in phone calls. Do you end the test? We say no — a test should be called based on the intended KPI. There will always be residual effects of a test, and (within reason) those should be monitored but allowed to happen while your test continues.

2. Confidence

In the context of testing, confidence can also be called statistical significance. Looking at the data, do your results meet your criteria for confidence? The standard benchmark, or significance goal, for judging test confidence is 95%.

Keep in mind that high confidence alone is not a reason to call a test. It might seem promising, but it’s only one piece of the puzzle. There are plenty of reasons it may be too early to call, including the length of the test or the sample size. An A/B test that has run for only a few hours, or reached only a small audience, may appear to show statistically significant results but should be allowed to run longer.
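To make the 95% benchmark concrete, here is a minimal sketch of one common way to estimate confidence: a two-sided, two-proportion z-test. This is an illustration rather than the exact calculation any particular testing tool performs, and the function and parameter names are hypothetical.

```python
import math


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def ab_confidence(control_visitors: int, control_conversions: int,
                  variant_visitors: int, variant_conversions: int) -> float:
    """Return confidence (1 - two-sided p-value) that the two rates differ.

    Assumption: a pooled two-proportion z-test is an acceptable
    approximation for the traffic levels involved.
    """
    rate_control = control_conversions / control_visitors
    rate_variant = variant_conversions / variant_visitors
    pooled = (control_conversions + variant_conversions) / (
        control_visitors + variant_visitors)
    std_err = math.sqrt(
        pooled * (1 - pooled) * (1 / control_visitors + 1 / variant_visitors))
    if std_err == 0:
        return 0.0  # no conversions (or all conversions): nothing to compare
    z = (rate_variant - rate_control) / std_err
    p_value = 2 * (1 - normal_cdf(abs(z)))
    return 1 - p_value


# Same conversion rates, different audience sizes (illustrative numbers).
print(ab_confidence(100, 5, 100, 8))      # small audience
print(ab_confidence(1000, 50, 1000, 80))  # ten times the audience
```

The two calls use the same conversion rates, yet only the larger audience clears the 95% goal. A number like this is one input to the decision, not the decision itself.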

3. Sample Size + Number of Conversions

If there’s a standard benchmark for confidence, there has to be one for sample size, right? This one isn’t so easy. In our opinion, your sample size should vary based on the audience of the brand for which you’re testing. For us, it truly varies project to project: several of our brands have benchmarks of roughly 1,000 respondents per creative variation or 50 form conversions, while for others it may be significantly more or less.

While there are formulas created to determine your ideal sample size, we believe they should be taken with a grain of salt. In our experience they often produce unrealistic requirements, so pairing those results with a common-sense approach is likely your best starting point. As you continue to test you’ll be able to determine your own ideal benchmark based on factors like past findings, traffic, and standard conversion rate.
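For reference, one widely used sample size formula for comparing two conversion rates can be sketched as follows. The 80% power default and the function name are assumptions made for illustration; they are not benchmarks from our own process.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate: float, relative_lift: float,
                           confidence: float = 0.95,
                           power: float = 0.80) -> int:
    """Approximate visitors needed per variation to detect a given lift.

    Uses the standard normal-approximation formula for two proportions:
        n = (z_alpha + z_beta)^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    Assumption: a two-sided test at the given confidence and power.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical page: 5% baseline conversion rate, hoping for a 20% relative lift.
print(visitors_per_variation(0.05, 0.20))
```

With these hypothetical inputs the formula asks for roughly eight thousand visitors per variation, well above a benchmark of 1,000. That gap is the kind of result we mean when we say the formulas need a common-sense check.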

4. Length of Test

Speaking of no standard right answer, let’s talk about the length of your test. Much like sample size, we believe that the ideal length of your test varies. It is highly dependent on how many variations of the test are running — and just to complicate things more, the sample size and confidence also impact the ideal length. Let’s break down the basics.

The larger the site traffic, the shorter the test window needs to be.

Extra variations increase testing time.

Days of the week can impact results, so running for less than one full week may skew the data.

The goal is to run your test just long enough to reach both your confidence and sample size requirements while accounting for day-of-week differences. Remember to monitor the overall URL performance: if you aren’t dipping below benchmarks, you can likely keep testing when you feel you don’t have enough data. While special considerations can be made based on the scope of the test, consider these simple launch guidelines that can help you easily kick off, and wrap up, new testing programs.
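The relationship between traffic, variations, and test length can be roughed out with a few lines of arithmetic. In this sketch, rounding up to whole weeks is our reading of the day-of-week point above, and the names and default values are hypothetical.

```python
import math


def estimated_test_days(visitors_per_variation: int, variations: int,
                        daily_visitors: int, min_days: int = 7) -> int:
    """Estimate how many days a test needs, rounded up to full weeks.

    Assumptions: traffic is split evenly across variations, and the test
    should always cover complete weeks to balance day-of-week effects.
    """
    total_needed = visitors_per_variation * variations
    raw_days = math.ceil(total_needed / daily_visitors)
    days = max(raw_days, min_days)
    return math.ceil(days / 7) * 7


print(estimated_test_days(1000, 2, 500))  # high traffic, two variations -> 7
print(estimated_test_days(1000, 3, 300))  # an extra variation -> 14
print(estimated_test_days(1000, 2, 100))  # low traffic -> 21
```

More traffic shortens the window, each extra variation lengthens it, and no test in this sketch ends before a full week has passed.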

5. The Effect

What happens if the lift of the variants is small, as in so small that there may not be much of an effect on the page and the test is not likely to reach confidence? This is when we consider sample size and length.

A test with an incredibly minimal effect (lift or decrease) may not ever reach confidence even if it has a significant sample size, the right number of conversions, and was run for multiple weeks. In this case, we would likely call the test because the variant would have nearly no long-term impact. The results would be neutral, as the test neither harmed nor helped the performance.

That being said, if a variant is dramatically underperforming after a larger window (think a few days to one week) it might be time to phase it out. Your test may not have reached all its benchmarks but keeping it on the site may cause unnecessary harm without additional helpful findings.
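Pulling the five categories together, the calling logic might be sketched as a simple decision function. Every threshold here is a hypothetical placeholder (the 30% harm cutoff, the 2% neutral band, the three-day and four-week windows), and requiring both the visitor and conversion benchmarks is a conservative assumption. Tune all of them to your own brand and traffic.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    confidence: float               # e.g. 0.95 for 95%
    visitors_per_variation: int
    conversions_per_variation: int
    days_running: int
    relative_lift: float            # variant vs. control on the intended KPI


def call_decision(s: Snapshot, *, min_confidence: float = 0.95,
                  min_visitors: int = 1000, min_conversions: int = 50,
                  min_days: int = 7, harm_days: int = 3,
                  harm_threshold: float = -0.30,
                  neutral_band: float = 0.02, max_days: int = 28) -> str:
    """Suggest whether to call a test. All thresholds are illustrative."""
    enough_data = (s.visitors_per_variation >= min_visitors
                   and s.conversions_per_variation >= min_conversions)

    # Confidence, sample size, and length benchmarks are all met.
    if (s.days_running >= min_days and enough_data
            and s.confidence >= min_confidence):
        return "call: winner" if s.relative_lift > 0 else "call: loser"

    # Variant is dramatically underperforming after a few days.
    if s.days_running >= harm_days and s.relative_lift <= harm_threshold:
        return "phase out variant"

    # Plenty of data and time, but the effect is too small to matter.
    if (s.days_running >= max_days and enough_data
            and abs(s.relative_lift) <= neutral_band):
        return "call: neutral"

    return "keep running"


print(call_decision(Snapshot(0.97, 1200, 60, 14, 0.15)))   # call: winner
print(call_decision(Snapshot(0.99, 200, 10, 1, 0.50)))     # keep running
print(call_decision(Snapshot(0.60, 400, 12, 5, -0.45)))    # phase out variant
print(call_decision(Snapshot(0.40, 5000, 250, 28, 0.01)))  # call: neutral
```

The second example is the early-confidence trap from section 2: 99% confidence after one day and 200 visitors still returns "keep running".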

What About Sales?

There is one thing missing from our list that many think of when deciding to call an A/B test: sales. The process we’ve explained above is for lead generation via landing pages, not ecommerce sales, which means sales are not the KPI in our test. On occasion, we may take offline sales (e.g. call center sales) into consideration at a client’s request, but our norm of not calling tests based on sales exists for good reason.

There are many touch points between landing page conversion and sales conversion that we don’t have in a controlled environment, such as exposure to supplementary marketing or a varied experience with a sales representative. Let’s say our test is focused on form submissions and sees a decent lift, but the call center for that brand is understaffed that week and clients don’t receive follow ups on time. Does that dip in sales mean the test was a failure? No — the problem was outside of the testing bubble and therefore cannot be counted when analyzing the test results.

A sales funnel can be incredibly long, and the offline sales acquired during a given lead generation test are only a portion of the total sales from a landing page, as some will continue to convert after the test has been called. It might sound counterintuitive to keep sales out of our equation, but throwing untracked variables into the mix can falsely impact the test results.

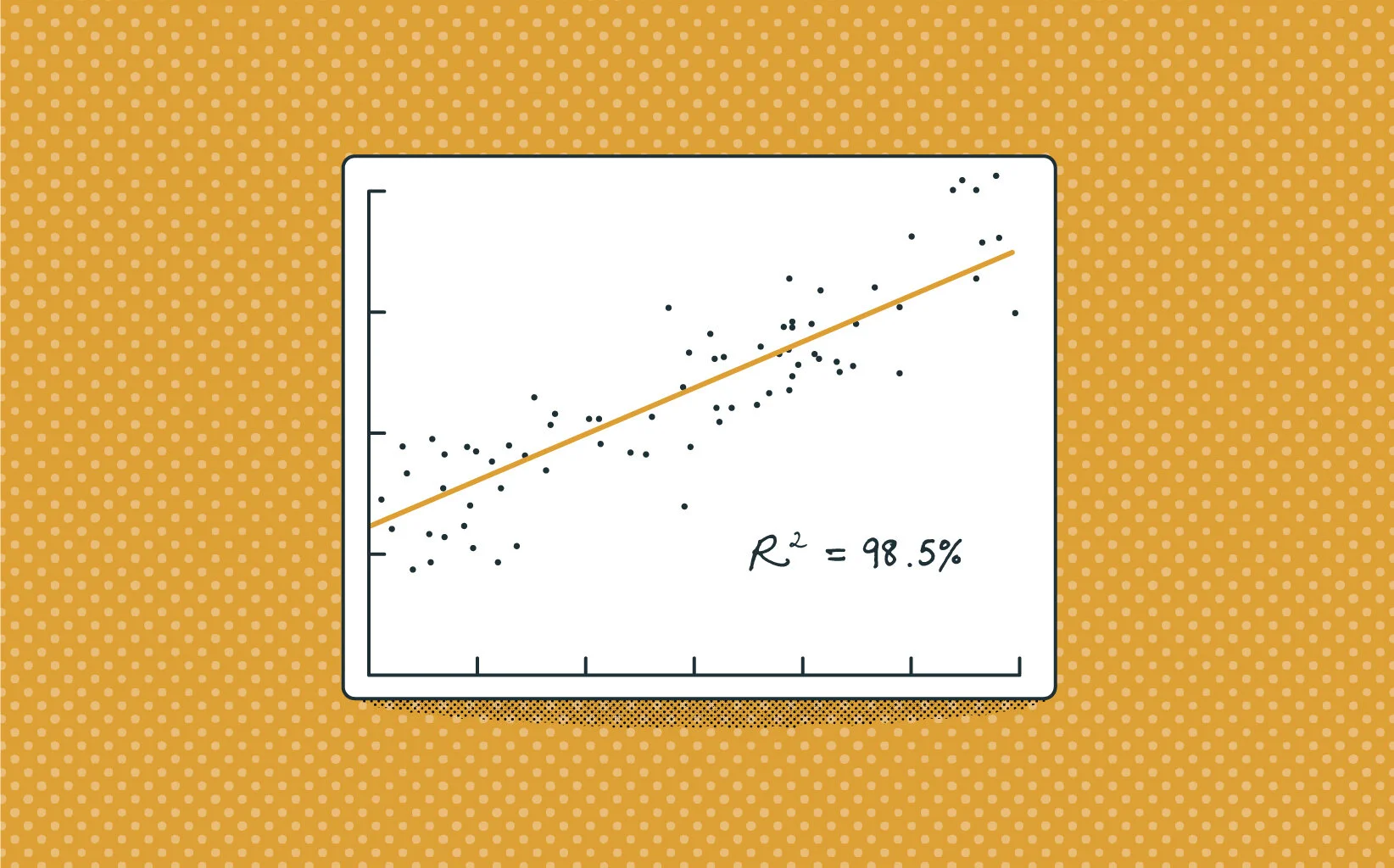

Ready to start testing? Try one of these three go-to landing page tests to start with, or find out how to best analyze your results using a regression analysis calculator.